The ChipIr beamline has been in operation since 2017 and has supported experiments in a range of scientific fields, including experiments testing the reliability of new AI systems that could become part of our everyday lives. In a recent article published in IEEE Transactions on Nuclear Science, Paolo Rech, Associate Professor at the University of Trento and a regular user of the ChipIr beamline, reviewed the challenges and opportunities of testing AI accelerators using neutrons.

Machine Learning is among the greatest advancements in computer science and engineering. It is used to identify patterns or objects within data or frames, a key capability for autonomous vehicles and robots. With a neural network, the designer trains (rather than programs) a Machine Learning model to perceive objects in the input image and to make decisions based on information hidden within the data, enabling it to solve problems normally considered impossible with traditional programming. Since neural networks are heavily used in automotive and aerospace applications, where safety is paramount, their reliability is vital.

However, ensuring the reliability of these systems is extremely challenging, due both to the complexity of the software, which is composed of hundreds of layers, and to the underlying hardware, which exploits parallel architectures to achieve real-time inference.

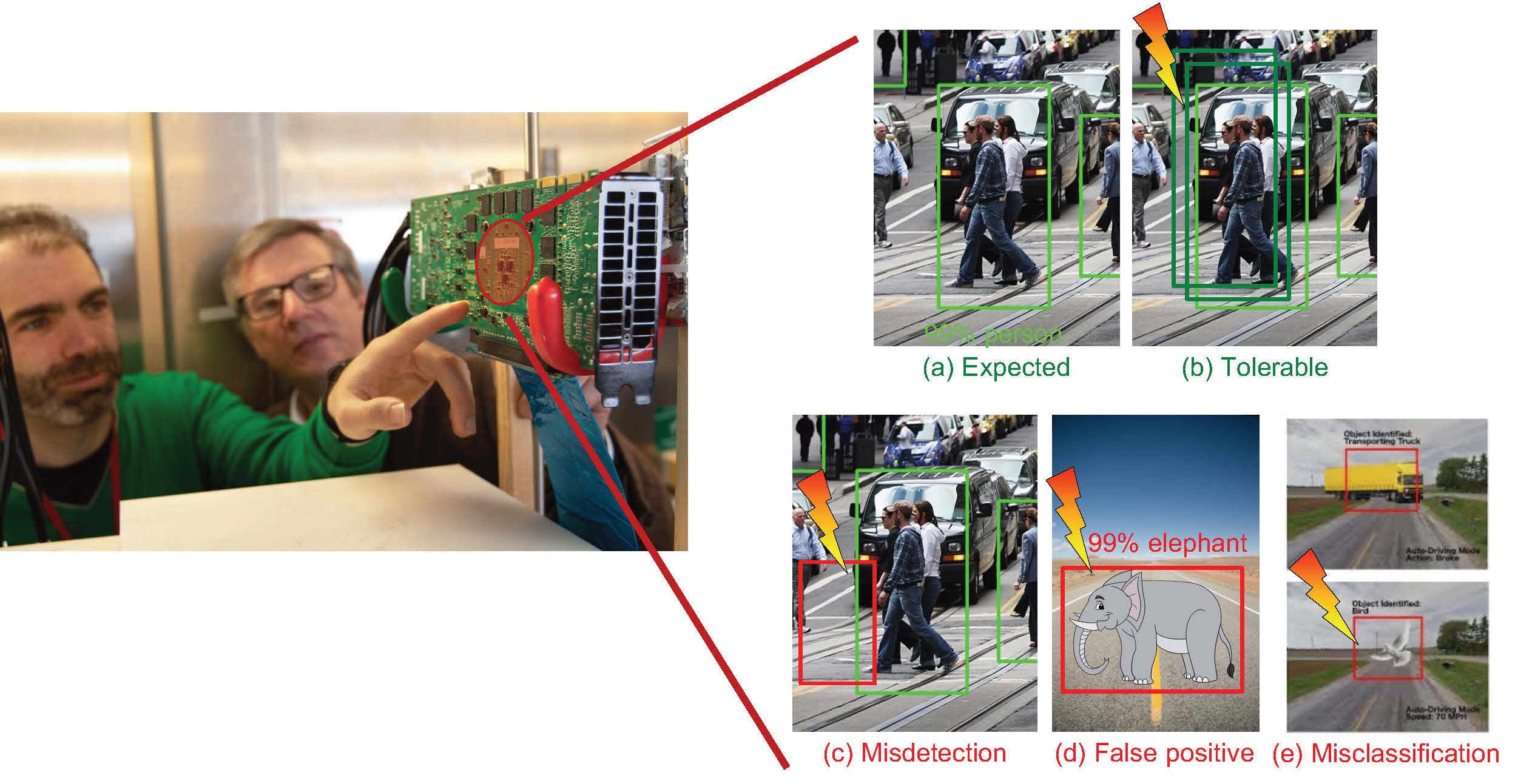

Machine Learning is a subset of Artificial Intelligence (AI), consisting of algorithms that learn to improve their accuracy as they are exposed to more data. One of the most interesting types of Machine Learning is Deep Learning (DL), in which several processing layers are used to extract intrinsic features from the input data. The resulting Deep Neural Networks (DNNs) can find patterns in this data, such as identifying objects within a frame. Over the past decade, Deep Learning has driven a sharp increase in AI-based applications, such as self-driving cars and deep space exploration. Since such applications are safety-critical, it is fundamental to investigate their reliability and to understand how to prevent failures.

Among the reliability challenges, the most crucial is the difficulty of identifying and predicting the effect of a hardware fault, although this can be done using neutron beam testing alongside other techniques. A further challenge is that the complexity and performance requirements of the hardware that executes DNNs are so high that designing dedicated fault-tolerant devices is extremely difficult and costly. The only devices that can currently execute DNNs fast enough are commercial chips, which are not specifically designed for safety-critical or space applications. Efficient hardening solutions, which reduce the chance that a fault leads to an error, are therefore very important. Accelerated beam experiments, such as those on ChipIr, are the best way to accurately measure the radiation sensitivity of a device, including an AI accelerator.

Single Event Effect (SEE) evaluation can be conducted for applications both in space and here on Earth (e.g. self-driving cars). Given the importance of AI reliability for terrestrial applications, it is fundamental to measure the error rate of AI accelerators in the Earth's natural radiation environment. To do this, facilities that deliver a terrestrial-like neutron spectrum, such as ChipIr, LANSCE, or TRIUMF, are essential. They make it possible to expose AI accelerators to a neutron flux that closely mimics the real world but is up to a billion times more intense, so researchers can gather statistically significant data on single event effects in a short time. ChipIr was designed specifically to ease the testing of electronic devices. Thanks to its focused beam and the infrastructure available to users, testing complex computing systems there is very effective and can help draw fundamental conclusions about the sensitivity of modern devices to terrestrial neutrons.
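As a rough illustration of why a billion-fold flux acceleration matters, the arithmetic commonly used in accelerated testing can be sketched as follows. The device cross section is the number of observed errors divided by the fluence (beam flux multiplied by beam time); multiplying it by the natural neutron flux gives the expected error rate in the field, often expressed in FIT (failures per 10^9 device-hours). The sketch uses the reference flux of about 13 neutrons/cm²/h (above 10 MeV, New York City sea level) from the JEDEC JESD89A standard. The beam flux, beam time and error count are hypothetical values chosen only for illustration, not ChipIr measurements, and the function names are our own.

```python
# Minimal sketch of accelerated neutron-test arithmetic.
# Assumptions: reference field flux from JEDEC JESD89A; all beam numbers
# in the example below are hypothetical, not measured results.

# Terrestrial neutron flux (>10 MeV) at New York City sea level, n/cm^2/h.
TERRESTRIAL_FLUX_N_CM2_H = 13.0

HOURS_PER_FIT = 1e9  # FIT = failures per 10^9 device-hours


def cross_section(errors: int, beam_flux_n_cm2_s: float, beam_time_s: float) -> float:
    """Device cross section in cm^2: observed errors divided by fluence."""
    fluence = beam_flux_n_cm2_s * beam_time_s  # neutrons per cm^2
    return errors / fluence


def fit_rate(sigma_cm2: float,
             field_flux_n_cm2_h: float = TERRESTRIAL_FLUX_N_CM2_H) -> float:
    """Expected failures per 10^9 hours of operation in the natural environment."""
    return sigma_cm2 * field_flux_n_cm2_h * HOURS_PER_FIT


def acceleration_factor(beam_flux_n_cm2_s: float,
                        field_flux_n_cm2_h: float = TERRESTRIAL_FLUX_N_CM2_H) -> float:
    """How many times more intense the beam is than the natural flux."""
    return beam_flux_n_cm2_s * 3600.0 / field_flux_n_cm2_h


if __name__ == "__main__":
    # Hypothetical test: a 5e6 n/cm^2/s beam (assumed order of magnitude),
    # 10 hours of beam time, 100 observed errors.
    beam_flux = 5e6
    beam_time = 10 * 3600
    errors = 100

    sigma = cross_section(errors, beam_flux, beam_time)
    print(f"cross section: {sigma:.3e} cm^2")
    print(f"FIT rate: {fit_rate(sigma):.1f}")
    print(f"acceleration factor: {acceleration_factor(beam_flux):.2e}")

    # Natural exposure equivalent to the beam time, in years.
    years = (beam_time / 3600) * acceleration_factor(beam_flux) / (24 * 365)
    print(f"equivalent field exposure: {years:.2e} years")
```

With these assumed numbers the acceleration factor comes out at roughly 1.4 × 10^9, so ten hours in the beam stand in for more than a million years of exposure at sea level. In a real analysis the error count would also carry a statistical confidence interval, which this sketch leaves out.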

Due to the intrinsically probabilistic nature of neural networks, it is very hard (if not impossible) to estimate the accuracy of a machine learning model. Nonetheless, with careful software design and hardware characterisation, it is possible to mitigate the effect of faults and significantly reduce the probability of neutron-induced misclassifications or misdetections.

To implement an efficient and effective hardening solution, it is necessary to study how both the model and the hardware respond to radiation. By identifying the faults that are more likely to cause misdetections or misclassifications, it is possible to design specific solutions to reduce the chance of an error, improving the safety of these systems.
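One common way to find out which faults matter, used widely in beam-test studies though not necessarily the exact procedure of the paper, is to compare each output recorded under the beam with a fault-free "golden" output. The sketch below assumes a classifier that returns one score per class; the outcome labels (masked, tolerable, critical) and the function names are illustrative choices, not taken from the source. A corruption that leaves the top-scoring class unchanged is counted as tolerable, while one that changes it, or produces non-finite values, is counted as critical.

```python
import math
from collections import Counter
from enum import Enum
from typing import Iterable, Sequence


class Outcome(Enum):
    MASKED = "masked"            # output identical to the golden (fault-free) run
    TOLERABLE_SDC = "tolerable"  # output corrupted, predicted class unchanged
    CRITICAL_SDC = "critical"    # corruption changes the predicted class


def argmax(scores: Sequence[float]) -> int:
    """Index of the highest score (first one wins on ties)."""
    return max(range(len(scores)), key=lambda i: scores[i])


def classify_outcome(golden: Sequence[float], observed: Sequence[float]) -> Outcome:
    """Compare one observed output with the golden output (hypothetical criterion)."""
    if len(golden) != len(observed):
        raise ValueError("golden and observed outputs must have the same length")
    if list(golden) == list(observed):
        return Outcome.MASKED
    # Faults can produce NaN/Inf, which makes the decision meaningless.
    if not all(math.isfinite(x) for x in observed):
        return Outcome.CRITICAL_SDC
    if argmax(golden) == argmax(observed):
        return Outcome.TOLERABLE_SDC
    return Outcome.CRITICAL_SDC


def summarize(golden: Sequence[float],
              runs: Iterable[Sequence[float]]) -> Counter:
    """Tally outcomes over all outputs recorded during a beam run."""
    return Counter(classify_outcome(golden, r) for r in runs)


if __name__ == "__main__":
    golden = [0.1, 0.7, 0.2]  # hypothetical class scores
    runs = [
        [0.1, 0.7, 0.2],           # unchanged
        [0.1, 0.6, 0.2],           # corrupted, class 1 still wins
        [0.9, 0.7, 0.2],           # corrupted, class 0 now wins
        [float("nan"), 0.7, 0.2],  # non-finite value
    ]
    for outcome, count in summarize(golden, runs).items():
        print(outcome.value, count)
```

Counting critical outcomes separately from all corruptions shows how many observed errors actually change a decision, which is the quantity a targeted hardening solution would aim to reduce. Exact float equality is acceptable here only because the golden and observed runs are assumed to execute the same deterministic code on the same device.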

“The way we program and execute codes is ever-changing,” explains Paolo. “On one hand, the paradigm of algorithm itself is shifting to neural network-based computation and, on the other hand, the computing architecture complexity is exploding to deliver sufficient performance. In this scenario the probability of hardware faults increases, the propagation of such faults can spread the effect to multiple operations, and the effect on the final output is highly unpredictable. An experimentally based reliability study then becomes essential to ensure a realistic understanding of the phenomena, so as to design hardening solutions that are effective once implemented in the field.”

Read the paper in full: Artificial Neural Networks for Space and Safety-Critical Applications: Reliability Issues and Potential Solutions, IEEE Transactions on Nuclear Science (IEEE Xplore), DOI 10.1109/TNS.2024.3349956